English Assessment Report 2023 - 2024

Academic Year Assessed

2023 - 2024

Program(s) Assessed

Major: Department of English Literature Option

Minors, Options, etc.: English (non-teaching) Literature

- Past Assessment Summary. Briefly summarize the findings from the last assessment report conducted related to the PLOs being assessed this year. Include any findings that influenced this cycle’s assessment approach. Alternatively, reflect on the program assessment conducted last year, and explain how that impacted or informed any changes made to this cycle’s assessment plan.

The most recent assessment of the English Literature Major/Minor (2021-2022) assessed all three program learning outcomes (Reading, Writing, and Research). One lesson from that assessment was that it is better to assess only one learning outcome each year, so that we can examine a single criterion in more depth. Consequently, this year we assessed only the first PLO (Reading). Our last assessment determined that 75% of our students met or surpassed Level 3 competency (our department threshold for PLOs) for all three PLOs in the three classes we assess: LIT 201 (Introduction to Literary Studies), a 300-level literature class, and LIT 494 (Senior Capstone). We also found a (not surprising) increase in competency as students progressed from lower-division to upper-division coursework. That assessment also showed that it was indeed wise to assess PLOs at the beginning, middle, and end of students' careers, so we have kept this practice. We expected to see this pattern of increasing competency again this year, and we did. In short, the previous assessment led us both to change some practices and to retain others.

- Action Research Question. What question are you seeking to answer in this cycle’s assessment?

Our Action Research Question was "Are students effectively using their reading skills to make meaningful arguments about the texts they are reading?" Meaningfulness is somewhat subjective. Even so, experienced English professors can reliably tell when a student is merely fulfilling the assignment and when they have gone "beyond" it to explore something meaningful, either to them personally or to the larger world around them. More and less meaningful papers differ distinctly in tone and level of engagement. We chose this question because we want to see that our students are not just developing technical skills but are also putting those skills to work to engage their world meaningfully in their papers. We want to see that our students have not just something to say, but something important to say. It is not coincidental that our Action Research Question is itself supposed to be meaningful and that we chose to analyze the meaningfulness of our students' writing: all the work we do at the university should rise to this level. At the same time, we also wanted to assess whether our students were using their reading skills (a PLO) to effectively express the meaningfulness of well-developed arguments (another PLO). In short, we wanted to assess not only whether students achieved the PLOs, but also whether they did so in a meaningful way.

By asking this question, we wanted to find out whether our teaching and writing assignments were truly engaging the students rather than just providing an academic hoop to jump through. We were impressed to find that the overwhelming majority of the papers did seem to engage the material in a meaningful way, as evidenced by both the high numeric scores and the positive narrative comments. I believe that focusing on a single PLO also allowed us to develop a much more effective Action Research Question this year, which is another improvement over our previous assessment.

- Assessment Plan, Schedule, and Data Source(s).

- Please provide a multi-year assessment schedule that will show when all program learning outcomes will be assessed, and by what criteria (data).

ASSESSMENT PLANNING SCHEDULE CHART

| PROGRAM LEARNING OUTCOME | 2023 - 2024 | 2024 - 2025 | 2025 - 2026 | 2026 - 2027 | Data Source* |
| --- | --- | --- | --- | --- | --- |
| #1 Reading | X | | | X | Below |
| #2 Writing | | X | | | Below |
| #3 Research | | | X | | Below |

The specific details of each learning outcome are given in the chart below, as are the specific data sources that were used in the assessment.

- Data Sources

- Three different professors selected an appropriate amount of data (from approximately 25% of the students in each course) according to the curricular goals and assignments for their class. These data may include long or short student papers, exams, writing journals, web pages, class presentations, etc. Each year these data are taken from the Senior Capstone class (LIT 494), a 300-level class, and our introduction to the major, Introduction to Literary Studies (LIT 201). This year we examined 2 long research papers from LIT 494, a class of 8 students, and 5 shorter papers each from LIT 308 and LIT 201, classes of about 20 students. The professors selected these papers as representative of the primary work done by the students in each class. All of the data were papers because writing papers is the primary and most representative work in almost all literature classes, and all three professors agreed that papers were the best measure of their students' abilities in their courses this year. Since students take the same courses for the major and the minor, we used the same data to evaluate both programs.

- Threshold Values


We expect 75% of the students to achieve a 3 or higher on the grading rubric provided for the PLO. Students met this threshold in all three classes:

- In LIT 494 (Research Capstone), students received four scores of 4 and two scores of 3.
- In LIT 308 (Multicultural Literature), students received one score of 2, three scores of 3, and eleven scores of 4.
- In LIT 201, students received one score of 2, seven scores of 3, and seven scores of 4.

Thus all of the LIT 494 scores, and 14 of 15 (93%) of the scores in each of LIT 308 and LIT 201, were at Level 3 or higher. These scores average 3.67 in LIT 494, 3.67 in LIT 308, and 3.40 in LIT 201. The same rubric was used for both lower-division and upper-division courses, so it is not surprising that scores were somewhat higher in the upper-division classes. Scores nonetheless remained strong throughout.

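The Python sketch below checks the threshold arithmetic. It recomputes each course's mean score and the share of scores at Level 3 or higher from the reported tallies. It assumes, as the tallies themselves do, that each reader's score counts as one observation. The 75% threshold is therefore applied to scores rather than to individual students. The names `TALLIES`, `THRESHOLD_SCORE`, `THRESHOLD_SHARE`, and `summarize` are illustrative only.

```python
# Score tallies for PLO #1 (Reading), 2023-2024, as reported in this section.
# Each inner dict maps a rubric score (1-4) to how many times it was given.
TALLIES = {
    "LIT 494": {4: 4, 3: 2},
    "LIT 308": {2: 1, 3: 3, 4: 11},
    "LIT 201": {2: 1, 3: 7, 4: 7},
}

THRESHOLD_SCORE = 3     # rubric level treated as competent (Level 3)
THRESHOLD_SHARE = 0.75  # share of scores expected at or above that level


def summarize(tally: dict[int, int]) -> tuple[float, float]:
    """Return (mean score, share of scores at or above THRESHOLD_SCORE)."""
    total = sum(tally.values())
    mean = sum(score * count for score, count in tally.items()) / total
    meeting = sum(count for score, count in tally.items() if score >= THRESHOLD_SCORE)
    return mean, meeting / total


for course, tally in TALLIES.items():
    mean, share = summarize(tally)
    status = "meets" if share >= THRESHOLD_SHARE else "below"
    print(f"{course}: mean {mean:.2f}, {share:.0%} at level 3+ ({status} threshold)")

# Expected output:
# LIT 494: mean 3.67, 100% at level 3+ (meets threshold)
# LIT 308: mean 3.67, 93% at level 3+ (meets threshold)
# LIT 201: mean 3.40, 93% at level 3+ (meets threshold)
```

The exact means are 22/6 and 55/15 (both about 3.67) for LIT 494 and LIT 308, and 51/15 = 3.40 for LIT 201. Every course clears the 75% threshold.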

What Was Done

Self-reporting Metric: Was the completed assessment consistent with the program’s assessment plan? If not, please explain the adjustments that were made.

Yes

How were data collected and analyzed and by whom? Please include method of collection and sample size.

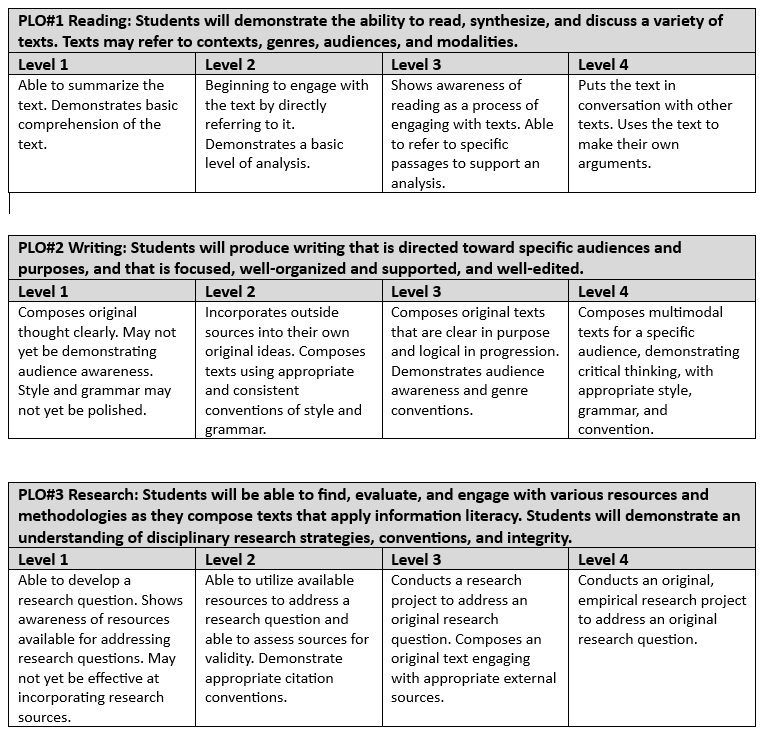

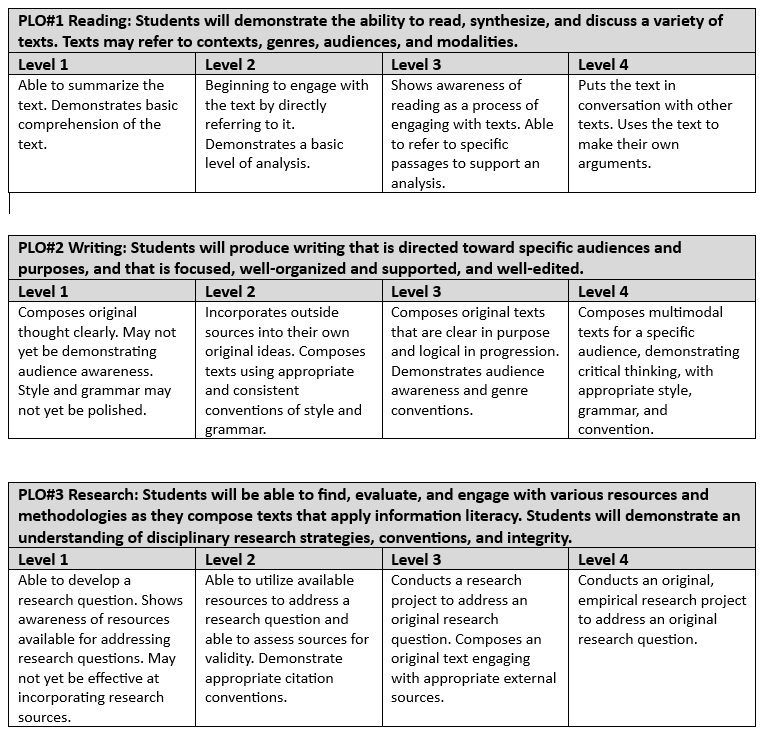

The rubrics for our three PLOs are included in this report. This year we assessed class papers from three courses (LIT 494, LIT 308, and LIT 201) according to the rubric for PLO #1 (Reading).

Please provide a rubric that demonstrates how your data were evaluated.

- Methodology

- According to our plan, three faculty from the literature option were chosen to assess the papers. Two of them were also on the assessment committee and familiar with the department's assessment process. Each professor read all the papers and gave each one both a score on the assessment rubric and narrative comments explaining that score. The narrative evaluations were important for understanding and contextualizing the numeric scores, giving us a broader understanding of the strengths and weaknesses of the students' papers.
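Because each of the three readers scored every paper, the sample sizes in the Data Sources section and the score tallies in the Threshold Values section can be checked against each other. If every reader scored every sampled paper once, each course should have three scores per paper. The Python sketch below assumes 5 papers each from LIT 308 and LIT 201, since the Data Sources text lists 5 shorter papers for those two courses. `READERS`, `PAPERS_SAMPLED`, and `SCORES_REPORTED` are illustrative names, not part of the department's process.

```python
# Hypothetical consistency check: assumes every reader scored every sampled
# paper exactly once, so each course should have (papers x readers) scores.
READERS = 3
PAPERS_SAMPLED = {"LIT 494": 2, "LIT 308": 5, "LIT 201": 5}  # assumed 5 each for 308 and 201
SCORES_REPORTED = {  # totals of the reported score tallies per course
    "LIT 494": 4 + 2,
    "LIT 308": 1 + 3 + 11,
    "LIT 201": 1 + 7 + 7,
}

for course, papers in PAPERS_SAMPLED.items():
    expected = papers * READERS
    reported = SCORES_REPORTED[course]
    match = "matches" if expected == reported else "does NOT match"
    print(f"{course}: {papers} papers x {READERS} readers = {expected} scores; "
          f"{reported} reported ({match})")
```

Under that assumption all three courses reconcile, with 6, 15, and 15 scores respectively.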

What Was Learned

Based on the analysis of the data, and compared to the threshold values established, what was learned from the assessment?

Note: While this assessment focuses on a single PLO, our conversation was much broader, and we have included some of the notes from that discussion below.

Our numerical assessments according to the rubric showed that students are generally achieving the expected PLO. The narrative assessments concurred and gave us more in-depth information about how our students are achieving it. Comments include: "cites numerous sources," "examines the evolution of Robin Hood in various adaptations," "offers citations from the texts," "uses quotes from the novel and from critics and scholars," and "shows an indication that horror as a genre has a long history that has been reshaped and reformed for various cultural purposes." These comments show that students are able to meet both of the skills named in the PLO, "citing appropriate passages" and "putting the text in conversation with other texts." Most of what we learned confirmed that students are generally achieving this PLO at the expected levels of competency. This suggests that teaching these abilities is one of our department's strengths.

In addition, many of the narrative comments also show that we are already doing a good job of encouraging students to write meaningful papers. For example, comments about papers include “understands how literature may productively explore the concept of trauma from diverse perspectives,” “shows an awareness of how cultural texts can help reshape our thinking and attitudes,” “this is an ambitious and unexpected essay, and this student has worked hard to produce a coherent and surprising paper,” and “this essay grapples with some big ideas.”

A few comments, however, showed that some papers still fall short on the PLO skills themselves, including "there are a lot of assumptions/warrants built in that go unexplained or unaccounted for," "not a lot of textual citations," and "analytical skills could use improvement." We therefore still need to keep working on these fundamental PLO skills with some students.

More importantly, a few comments suggested that we need to work harder to help students use their skills to write meaningful papers. Notably, these comments were made primarily about the 2 papers that received a score of 2 on the rubric. For example: "This essay is very 'pat' . . . without ever building to anything more substantial" and "engages several texts, but only in a vague and superficial manner." We learned, then, that students' weakest papers were also the least meaningful. This suggests that our question about meaningful papers is a useful measure of student success. The very few papers judged pat or superficial mattered less than a broader pattern: even the good papers drew relatively few comments specifically noting their meaningful engagement with the material, and we would have liked to see more. So even though only a few students are falling short in this regard, we learned that meaningful engagement is a worthwhile goal, one we can work to help students achieve more consistently.

In summary, we learned that our PLO is a good measure of fundamental skills, and that while our students are generally achieving this goal, there is always room for improvement. More importantly, our Action Research Question helped us focus on how we would like to encourage our students to use their fundamental technical skills to write more meaningful papers. Meaningfulness is another measure of success that we would like to see in a greater number of student papers.

How We Responded

Describe how "What Was Learned" was communicated to the department or program. How did faculty discussions re-imagine new ways program assessment might contribute to program growth/improvement/innovation beyond the bare minimum of achieving program learning objectives through assessment activities conducted at the course level?

The literature professors who conducted the assessment will meet with the literature option professors at our monthly meeting to discuss the results. In addition, we will discuss the findings with the full department at a faculty meeting in November or December. In particular, we will emphasize the need to keep teaching our students the fundamental PLO skills. Above all, we will encourage faculty to help students use these technical skills to write more meaningful papers. We plan to discuss in depth what different professors consider a meaningful paper and how we can best help our students write such papers. This is especially important in upper-division and capstone courses, after students have learned the more basic technical skills in lower-division classes. We will also discuss how the curriculum and teaching strategies can be improved to meet these goals. Finally, to prepare for next year's assessment, we will encourage professors to teach writing skills explicitly, alongside reading skills, in all their literature classes.

Closing the Loop

While past assessments have focused narrowly on the technical skills assessed by our Reading PLO, this year's Action Research Question let us place these skills in a broader context by exploring how students put them into action to write meaningful papers. We believe that sharing what we learned with the literature option faculty and the full department will significantly improve our students' work in the future. This is especially likely because the papers that scored lowest on the core PLO skills were also the ones judged least meaningful. We believe that focusing on meaningfulness will have a transformative effect both on how our professors teach their classes and on our students' experiences writing papers.